Comparing Municipal Policies in Boston MA, San Jose CA, Seattle WA and Tempe AZ

As Artificial Intelligence, particularly generative AI, becomes increasingly integrated into our daily lives, the need for responsible and transparent guidelines becomes paramount. Several U.S. cities, including Boston, MA; San Jose, CA; Seattle, WA; and Tempe, AZ, have published guidance documents for their city employees. In this blog post, we’ll compare these municipal policies and discuss crucial elements for local governments to consider as they lean into issuing guidance to their employees on the potentials and pitfalls of generative AI.

AI Policy Snapshots

These city policies on generative AI reflect a growing awareness of the potential impact, risks and benefits of the technology. They highlight the need for cities to actively engage with AI technologies, establish guidelines and collaborate with stakeholders to ensure responsible and ethical AI deployment. As AI continues to shape our cities and daily lives, city-led policies can serve as critical guides toward a more equitable and resident-centered future, tapping into AI’s potential while safeguarding the well-being of communities.

Boston’s Responsible Experimentation

Boston’s interim guidelines on generative AI emphasize responsible experimentation. The policy encourages city staff to fact-check AI-generated content, disclose AI use in public-facing content and written reports, and avoid the sharing of sensitive information with AI systems. The city also acknowledges that generative AI, like all technology, is a tool, and users remain accountable for its outcomes.

San Jose’s Transparency Efforts

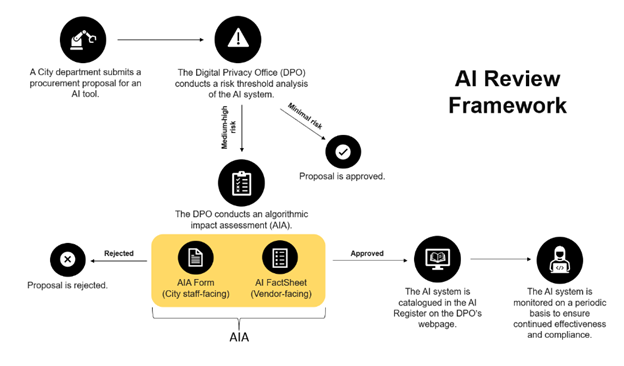

San Jose has published Generative AI Guidelines that are regularly updated in response to changing laws, technologies and best practices. Employees must record their use of generative AI through the city’s generative AI reporting form and are encouraged to join AI working groups to help enhance the city’s guidelines. The city also maintains an Algorithm Register to review and approve all AI systems in use. Its AI review framework requires that all algorithmic systems be assessed by the city’s Digital Privacy Office.
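For cities that want to prototype a similar workflow, the reporting form and register described above can be sketched as a small data structure. This is only an illustration under stated assumptions: the class names, fields and checks below are hypothetical and are not taken from San Jose’s actual form or register.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class AIUseReport:
    """One self-reported use of a generative AI tool (all fields are hypothetical)."""
    department: str
    tool_name: str
    purpose: str
    used_on: date
    contains_sensitive_data: bool = False


@dataclass
class AlgorithmRegister:
    """Illustrative register: tools approved after review, plus logged uses."""
    approved_tools: set[str] = field(default_factory=set)
    reports: list[AIUseReport] = field(default_factory=list)

    def approve(self, tool_name: str) -> None:
        """Mark a tool as reviewed and approved for staff use."""
        self.approved_tools.add(tool_name)

    def record(self, report: AIUseReport) -> list[str]:
        """Store the report and return any issues that need follow-up."""
        issues = []
        if report.tool_name not in self.approved_tools:
            issues.append("tool has not been reviewed and approved")
        if report.contains_sensitive_data:
            issues.append("sensitive data was shared with the tool")
        self.reports.append(report)
        return issues


# Hypothetical usage: one approved tool, one report about an unreviewed tool.
register = AlgorithmRegister()
register.approve("ExampleChat")
issues = register.record(
    AIUseReport("Parks", "OtherTool", "draft a flyer", date(2024, 1, 15))
)
print(issues)
```

Keeping every report, even a flagged one, gives reviewers a complete record to work from. A real system would likely block or escalate flagged uses according to local policy.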

Seattle’s Procurement and Accountability

Seattle’s interim policy requires that all software, even free or pilot products, be submitted for approval through the city’s procurement process. The policy also addresses intellectual property, attribution, reducing bias and harm, data privacy, and public records. Seattle IT plans to collaborate with stakeholders to research future policy implications, indicating a commitment to continual improvement.

Tempe’s Ethical Governance

Tempe’s Ethical Artificial Intelligence Policy facilitates collaboration between all departments and IT for AI reviews, including semi-annual evaluations of AI solutions. The policy establishes a Technology and Innovation Steering Committee to oversee monitoring, reporting, public awareness and non-compliance measures. Additionally, it directs IT to create AI review processes and provide training programs to promote AI literacy, ethics, privacy protection and responsible AI practices among employees involved in AI solutions.

AI Review Framework, City of San Jose

Analysis and Recommendations for Cities

Establish Mechanisms for AI Governance

Generative AI can produce content autonomously, making accountability essential. Policies should make clear that municipal staff remain responsible for the outcomes of the tools they use. Cities can introduce mechanisms for AI governance that apply different levels of scrutiny to employee AI use. From self-reporting experiences, as seen in Boston, to comprehensive reviews like San Jose’s, or authorizing AI use through the city’s acquisition process, as in Seattle, different approaches can suit different needs. Further, generative AI produces content based on user-issued prompts and data, raising concerns about the potential misuse of residents’ personal and sensitive information. Policies should protect the privacy of residents and define consequences for mishandling this data.

“Generative AI is a tool. We are responsible for the outcomes of our tools. For example, if autocorrect unintentionally changes a word – changing the meaning of something we wrote, we are still responsible for the text. Technology enables our work, it does not excuse our judgment nor our accountability.”

Santiago Garces, CIO, Boston

Improve AI Literacy

Providing training and resources for city staff is essential, ensuring they are well-versed in AI literacy, ethics, privacy protection, and responsible AI practices. Similar to Boston, cities can provide examples of practical use cases to help employees understand AI’s potential applications in their daily work. Furthermore, following Tempe’s lead, cities should proactively engage the public in discussions regarding the ethical use and governance of AI. City policies can also improve residents’ understanding of AI by disclosing AI use and maintaining a register of AI systems. This transparency can build trust among residents and stakeholders and encourage future exploration of emerging technologies to solve real-world problems.

Review and Adapt Your Policy

AI policy must evolve alongside our understanding of the technology’s impact, benefits, and potential harm. To achieve this, consider establishing a review committee, audit process, or working groups tasked with iteratively assessing your city’s AI needs. This ensures that your policies remain adaptive and relevant in a rapidly changing landscape. In addition, like San Jose, cities can set baseline standards for all employees to follow and allow departments to implement additional guidelines to suit their respective needs.

Address Specific Stakeholders

AI policies can offer clear directives to city staff, mirroring Tempe’s specific mandates for IT to create and complete AI reviews. By addressing specific stakeholders, policies become more actionable and effective in guiding responsible AI use within the city.

In addition, city AI policies should pay close attention to:

- Data Privacy: Policies should safeguard residents’ sensitive information and establish stringent guidelines for data ownership and sharing, ensuring that privacy remains a top priority.

- Bias Mitigation: AI-powered tools, particularly those utilizing Natural Language Processing, rely on datasets that may contain harmful biases. City policies should focus on detecting these biases and eliminating misinformation in AI-generated content to ensure fairness and safety.

- Community Benefit: Generative AI has the potential to significantly benefit resource-constrained governments, aiding in coding, data analysis, and producing public communications in plain language. Policies should emphasize the importance of AI systems benefiting the wider community, aligning technology use with the city’s broader goals and values.

By considering these key aspects and recommendations from existing municipal guidance, cities, towns, and villages can develop and refine their AI policies to navigate the complex terrain of generative AI while upholding ethical standards and promoting responsible usage for the well-being of their communities.

Stay up to date with our Urban Innovation work online or get in touch with us at innovation@nlc.org. Click here to learn more about becoming an NLC member and accessing our full catalog of municipal resources.